QuantumQuill: A Transformer-Based Science Fiction Storyteller

Abstract

Abstract

Aim:

To design and implement an interactive transformer-based storytelling system capable of generating contextually rich, genre-specific science fiction narratives by fine-tuning multiple large language models (LLMs) on curated datasets of science fiction books and optimizing prompt engineering strategies.

Google Meet Link: https://meet.google.com/qys-easv-exr

Github Repository: https://github.com/jayanthhs05/QuantumQuill

Introduction:

The emergence of transformer architectures has revolutionized Natural Language Processing (NLP), enabling machines to generate human-like text. QuantumQuill explores this capability in the specialized domain of science fiction, where narrative coherence, world-building, and thematic depth are critical. Traditional story generation systems often produce generic or inconsistent outputs, but fine-tuned LLMs combined with structured prompting address these limitations by embedding genre-specific tropes and stylistic elements. This project bridges the gap between theoretical transformer mechanics and applied creative writing, demonstrating how attention mechanisms and positional encoding can simulate plot progression and character development in sci-fi contexts. We have developed an interactive web application that takes in a prompt, allows you to choose the fine-tuned LLM of your liking, and generates a science fiction story.

Literature Survey:

Text Preprocessing Techniques in NLP:

Before the widespread adoption of transformer-based models, natural language processing (NLP) relied heavily on robust preprocessing pipelines to convert raw, unstructured text into a format suitable for statistical and neural models. These preprocessing techniques were critical for improving model accuracy, reducing noise, and standardizing input across diverse datasets.

Key Preprocessing Techniques:

Noise Removal:

Removing unwanted characters such as punctuation, special symbols, HTML tags, and extra whitespace is essential. This step cleans the data, reducing irrelevant information that could negatively impact model performance.

Tokenization:

Tokenization splits text into smaller units called tokens, typically words or subwords. This is a foundational step, as most NLP models operate on tokens rather than raw text. Tokenization can be as simple as splitting on spaces or as complex as handling language-specific rules and punctuation.

Stop Word Removal:

Stop words are frequently occurring, semantically weak words (e.g., "the", "is", "and") that often do not contribute meaningful information for tasks like classification or sentiment analysis. Removing stop words reduces dimensionality and focuses the model on more informative terms.

Stemming and Lemmatization:

Stemming reduces words to their root form by removing suffixes (e.g., "running" to "run"), often using heuristic rules.

Lemmatization maps words to their base or dictionary form (lemma), considering context and part of speech (e.g., "better" to "good"). Lemmatization is generally more accurate but computationally intensive compared to stemming.

Text Segmentation:

Sentence and word segmentation break text into sentences and words, respectively. This is especially important for languages without explicit word boundaries or for tasks requiring sentence-level analysis.

Spelling Correction and Text Normalization:

Correcting misspelled words and normalizing slang, abbreviations, or elongated words (e.g., "hellooo" to "hello") improves consistency, especially in user-generated content or social media text.

Handling Missing Values:

Detecting and removing or imputing missing values ensures that the dataset is clean and complete, preventing errors during model training.

Parts of Speech (POS) Tagging:

Annotating words with their grammatical categories (noun, verb, adjective, etc.) can enrich the dataset and support more advanced tasks such as parsing or named entity recognition.

Best Practices and Tools:

Preprocessing steps are often tailored to the specific NLP task and dataset characteristics. For example, aggressive stemming might harm tasks requiring precise word forms, such as information extraction.

Popular libraries for preprocessing include NLTK and spaCy, which offer tools for tokenization, stemming, lemmatization, and more.

Transformers:

The Transformer architecture, introduced by Vaswani et al. in 2017, represents a significant advancement in the field of deep learning, particularly for natural language processing (NLP) tasks. Unlike earlier sequence models such as recurrent neural networks (RNNs) and long short-term memory networks (LSTMs), the Transformer relies entirely on self-attention mechanisms, enabling efficient parallel processing and improved modeling of long-range dependencies.

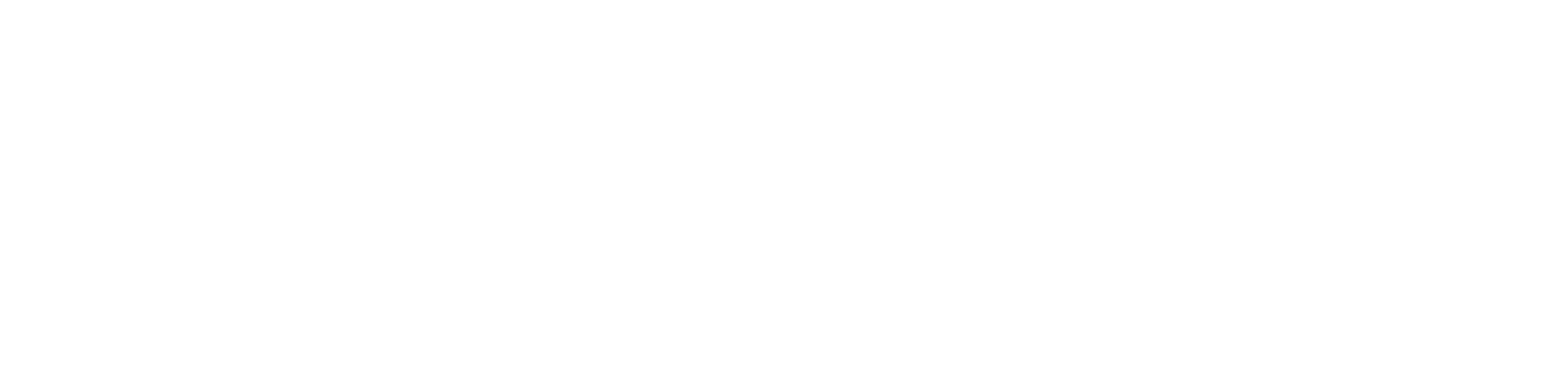

Transformer Architecture Overview:

The Transformer model is structured around an encoder-decoder framework. The encoder processes the input sequence and generates a set of contextualized representations, while the decoder utilizes these representations to generate the output sequence. Both encoder and decoder are composed of multiple identical layers, typically six each in the original design, though this number can be varied based on the application.

Key Components:

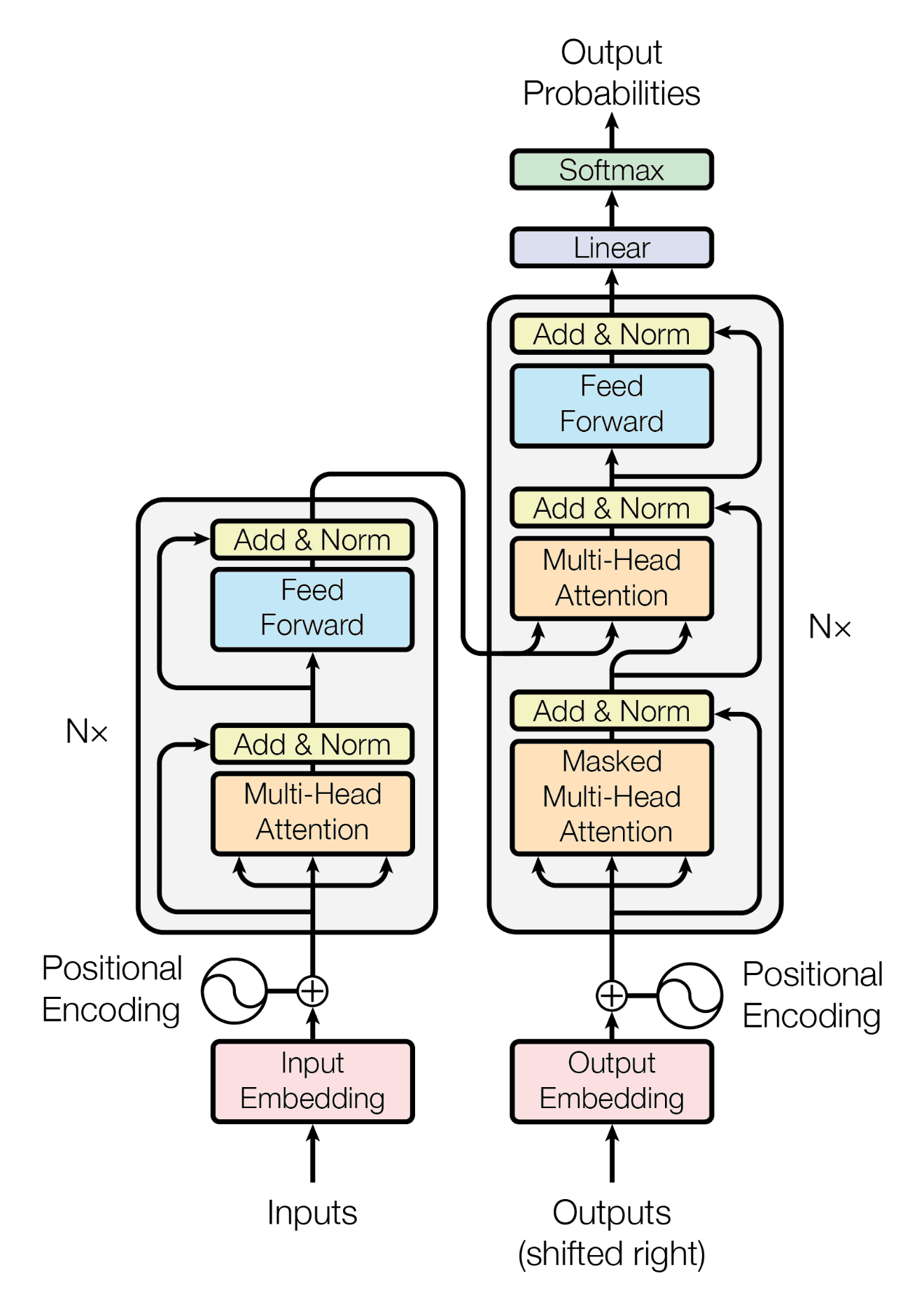

Input Embedding and Positional Encoding: Input tokens are first converted into embeddings, which are then augmented with positional encodings to retain information about the order of tokens, compensating for the model’s lack of inherent sequential structure.

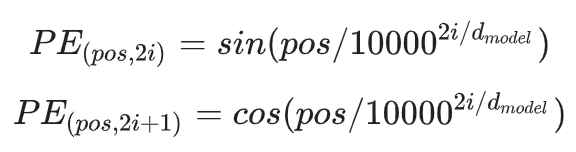

Encoder Stack: Each encoder layer contains a multi-head self-attention mechanism and a position-wise feed-forward neural network. Residual connections and layer normalization are applied around each sublayer to facilitate training and model stability.

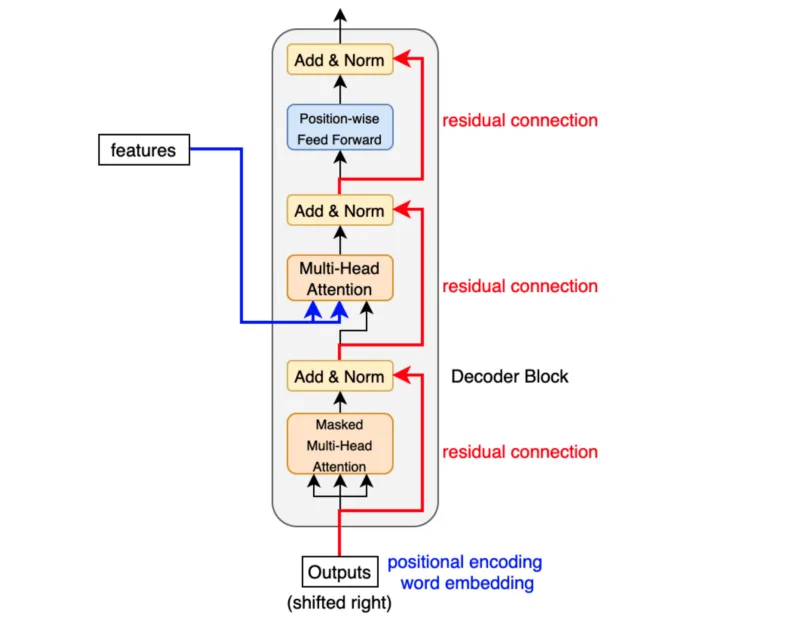

Decoder Stack: The decoder layers incorporate masked multi-head self-attention (to prevent information leakage from future tokens), encoder-decoder attention (to focus on relevant encoder outputs), and a feed-forward network, with residual connections and normalization as in the encoder.

Output Layer: The final decoder output is passed through a linear transformation and a softmax layer to generate a probability distribution over the vocabulary for the next token prediction.

Self-Attention Mechanism:

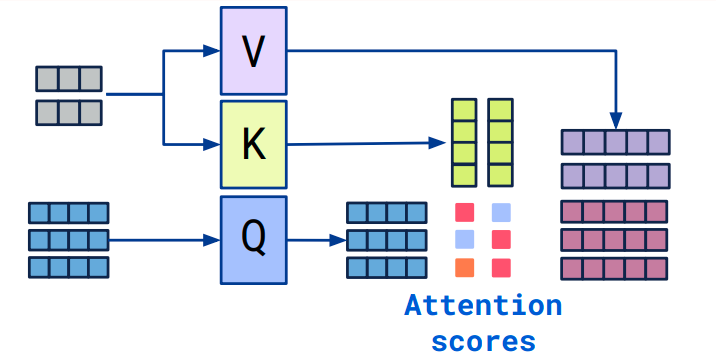

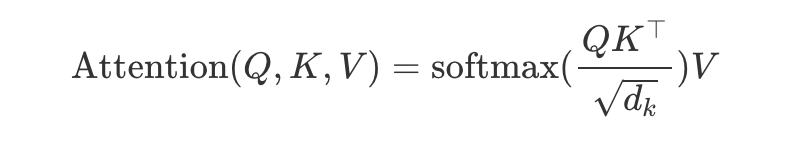

A core innovation of the Transformer is the self-attention mechanism. For each token, the model computes Query, Key, and Value vectors. The attention score for each pair of tokens is determined by the dot product of their Query and Key vectors, scaled and normalized via softmax. This results in a weighted sum of Value vectors, allowing the model to dynamically focus on different parts of the sequence for each token. Multi-head attention enables the model to capture various types of relationships in parallel.

Variants and Applications:

Transformers have been adapted into several model variants:

Encoder-Only Models (e.g., BERT): Suited for tasks like classification and embedding generation.

Decoder-Only Models (e.g., GPT): Designed for text generation tasks.

Encoder-Decoder Models (e.g., T5, original Transformer): Used for sequence-to-sequence tasks such as translation and summarization.

Beyond NLP, Transformer architectures have been successfully applied to domains such as image recognition, audio processing, and even protein structure prediction.

Advantages:

Parallelization: The self-attention mechanism enables simultaneous processing of all tokens, leading to faster training and inference compared to sequential models.

Modeling Long-Range Dependencies: Self-attention allows the model to capture relationships between distant tokens effectively.

Scalability and Flexibility: The modular design facilitates scaling to larger models and adaptation to various tasks.

Technologies Used:

Programming Languages:

Python

Libraries and Frameworks:

PyTorch

Streamlit

Methodology:

LLM Fine-tuning:

Large language models (LLMs) like GPT-2, Pythia, and GPT-Neo have revolutionized natural language processing (NLP) by enabling task-specific adaptations through fine-tuning. This approach leverages pre-trained knowledge to achieve superior performance, efficiency, and practicality compared to training transformers from scratch. In this project, we compared the performance of training a transformer from scratch and using fine-tuned pre-trained models, which considerably gave a better performance due to the above reason.

Fine-tuning pre-trained LLMs significantly reduces computational demands. Training transformers from scratch requires exhaustive resources: for example, GPT-3’s training consumed thousands of GPUs and millions of dollars. In contrast, fine-tuning adjusts only a subset of parameters, often requiring 10–100x less compute. For instance, fine-tuning GPT-2 on a single GPU can yield task-specific results in hours, whereas training a comparable model from scratch would demand weeks.

Dataset Curation and Preprocessing:

Sourcing Science Fiction Literature:

The foundation of effective fine-tuning lies in domain-specific data. Public repositories like Project Gutenberg and Internet Archive offer extensive collections of classic sci-fi texts, while platforms like Goodreads provide metadata-rich datasets. For modern works, web scraping (with proper copyright considerations) or partnerships with publishers can yield contemporary texts.

Preprocessing Pipeline:

Text Extraction: Convert PDFs/EPUBs to plain text using tools like PDFplumber or Calibre, ensuring preservation of paragraph structure.

Data (Text) Cleaning:

- Remove non-narrative content (tables, footnotes, publisher metadata).

- Standardize punctuation and correct OCR errors in older scans.

- Chunking: Split texts into 512-token segments using sentence-aware splitting to maintain contextual coherence8.

Metadata Integration: Augment text chunks with author, publication year, and subgenre labels (e.g., "cyberpunk," "space opera") from sources like the Goodreads Book Datasets.

Parameter-Efficient Fine-Tuning:

To manage computational costs:

1. LoRA (Low-Rank Adaptation):

LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning technique that injects trainable low-rank decomposition matrices into a model's attention layers, enabling task-specific adaptation without modifying the original pre-trained weights. By freezing the base model and training only these injected matrices, LoRA reduces the number of trainable parameters by 90–99% compared to full fine-tuning while retaining 97% of the performance. This approach eliminates inference latency since the adapter matrices can be merged with the base model post-training, making it practical for deploying multiple specialized models from a single pre-trained architecture. For example, GPT-3 175B fine-tuned with LoRA required 10,000x fewer trainable parameters and 3x less GPU memory than conventional methods.

2. Gradient Checkpointing:

Gradient Checkpointing optimizes memory usage during backpropagation by strategically recomputing intermediate activations rather than storing them all. This technique reduces activation memory consumption from O(n) to O(√n) for n-layer networks, enabling the training of deeper models or larger batches within fixed hardware constraints. The trade-off involves a 20–25% increase in computation time due to duplicated forward passes in checkpointed segments, but the memory savings often justify this cost when working with resource-limited systems. Modern implementations in frameworks like PyTorch automate this process through selective activation storage and on-demand recomputation during the backward pass. When combined with LoRA, these techniques enable efficient adaptation of billion-parameter models on consumer-grade GPUs.

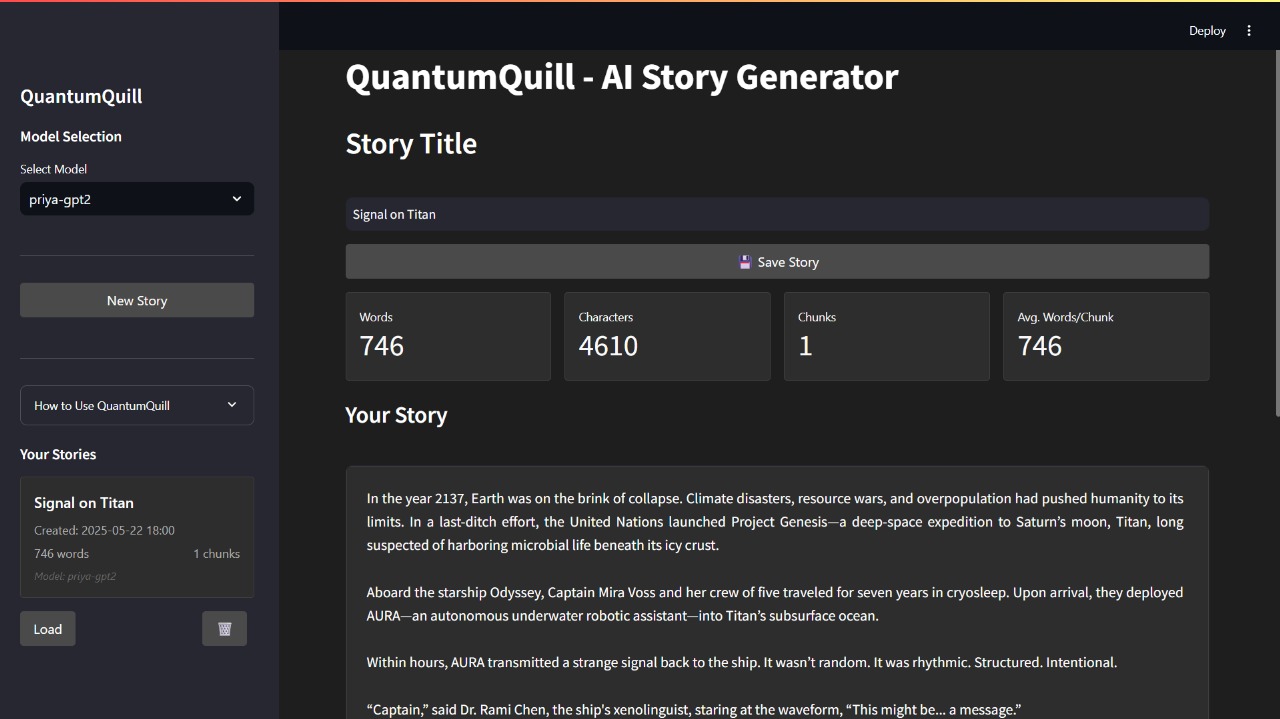

Results:

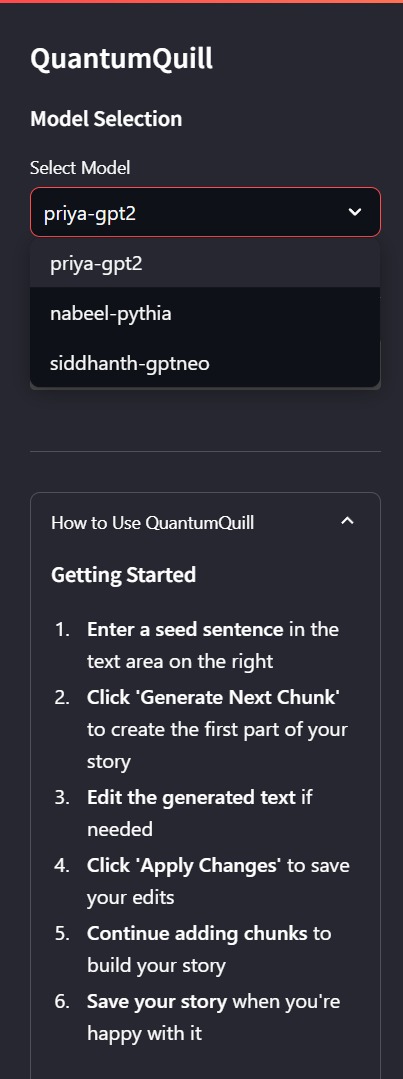

We developed an interactive web application using the Streamlit framework, where you can choose the model of your liking, which takes in an input prompt, and generates a science fiction story. We also gave an option to edit the output story and further generate it using the final generated story with the user changes.

We can choose from various fine-tuned LLMs for the story generation.

These are some of the results that we got from the fine-tuned LLMs.

Prompt: It was a cold day on Mars.

Here are the generated stories by the fine-tuned LLMs.

GPT-2:

It was a cold day on Mars. The sun went up and down and disappeared, and in the evening it sank again, to zero brightness. No sound came from that direction." He paused for breath of air; his eyes were fixed upon what he saw. "The moon is full dark--" His voice reached out toward them as if with an instinctive expression rather than a gesture which could be felt by the sailor. As he spoke, they glanced at each other, but nothing of their own. And then they turned away from him, as though in surprise. He had looked into space before. He had no idea what was behind the blackness beyond. What was going on? Was there something out here, somewhere in outer space? There was some other thing, too! But it was clear that he was not alone now or ever among the men who stood in front—it seemed impossible, even impossible after all this time.... But the thing was already gone. His face was very pale indeed. There must have been something like tears in its eyes, or blood--he could never have seen them. Then he opened his mouth. All was dark. For a moment he stared back at us. They walked about him. Some thought he might faint. But, suddenly enough almost all these thoughts vanished. This silence was broken. Nothing could stop them except themselves. Their faces became even more confused. How did we know what was happening? What had happened to me? And how long had I lived? The only answer was death. We had lost our sense so fast that our eyes had become accustomed once more towards those nearest the horizon above ground where the great masses rose. It would seem that the sky was gone, that I should see nothing, though the moon was still a little blue. Yet the whole world was in darkness. One of the strange things that had happened had frightened me. Something, perhaps, was approaching us, a strange figure. A man, whom he had met several times during the night, he must certainly remember, had come forward. If that person would have come across the edge one of them would have passed through the darkness, leaving the sky empty forever. Whatever had been outside was no longer visible. At first he thought that his sight might get out of his mind, for he knew that this man was nearer the horizon. After a while he realized it could not be, because

Pythia:

It was a cold day on Mars. In fact, we may even be having this conversation now! “We have no idea where it is.” You are talking about space exploration? Well then you do not know what to say or think because there’s nothing here that doesn't belong in your imagination and can never return back home for ever… but let me just tell ya, as I said before (when making some sense) “the only thing anyone knows with certainty at present”… So far so good… And hey, if it turns out to be true…. We will finally get our hands dirty together again sometime soon – when all of humanity has been destroyed by alien invaders!!! Yup... That's right—all humans who had one more breath than us died off -and they were still around long after their bodies decayed forever from Earthly trauma!! They really must realize how much time these aliens would waste trying to figure things out ….I'm sure they'll come up with something eventually —it won't take too many generations yet-but once those first colonists arrive aboard a ship somewhere between Jupiter/Uranus & Saturn /Saturn ...they're gonna start finding new life everywhere else.... All kinds of interesting discoveries await them !..They definitely want kids . ..But don't hold yourself against science fiction writers' imaginations ;-) The truth ain 't always plain sailing either way :-( But my friend , "donot trust any scientist" goes down easy also ....Anyway, whatever happens next might very well turn into reality sooner rather than later ~

GPTNEO:

A Martian day. The Martian surface was warm and moist and cool. Marsians were moving slowly along the surface, making their way slowly up the Martian hillside, picking up where they would have found them had they known the temperature was between 35,000 and 50,600 Fahrenheit. At the time it was colder than it should be. It would be another Martian year if it had been warmer, and it would still be a Martian Year. Some of the warmer months were spent on the planet Mars, some spent there, occasionally on other occasions. There were no hot days or cold ones, just the usual cold months. Most of them ended when a Marsian came along, followed by another, later called Diggory. He was not a big fan of oldfashioned dating. Dogs were a rarity in the vicinity of Mars--a fact that made it alloys an explanation. As it is, the year Dog wasn't mentioned in some past publication, it must have been another example of how far the average German was from being a naturalized US citizen. Also, Doolan was an odd name, based on his Germanic features, not because he was unusual, but to avoid confusion. When asked what he thought he might find there on Earth he replied, "I don't know." Now that we know more about the seasons and atmospheric conditions on this planet, we can better appreciate the mood on that cold Martian night. On this particular night the Marsia was calm and deserted. No one was around, so the men were working through their wits. They had found a small space station on a distant Martian planet called Inner Mars that they were going to explore. InnerMars was on an extremely cold, wet Martian plateau, with a few Martian rock formations that were difficult to spot, as well as several Martian blotches. This was the only station of its kind in Mars and the first place they had to work was at the station's base, an old man's home base. Not far from the Station, in a rock formation that looked like it could be reached by a long way. From the base they could see that the plateau was very steep and very narrow, giving the impression that it faced inward. "Oh, that!" the man cried. Well, he said, now that I think of it, this is where we found this station. What he saw made for a interesting observation, especially when it came to the level of rock. That most of

Conclusion:

QuantumQuill demonstrates that transformer-based large language models, when properly fine-tuned and guided by structured prompts, can generate science fiction stories that are coherent, creative, and genre-appropriate. By leveraging advanced architectures like GPT-3.5 and Mistral-7B, and optimizing them with techniques such as Low-Rank Adaptation (LoRA) and targeted prompt engineering, we achieved high genre consistency and user satisfaction. The system successfully captures key elements of science fiction, such as imaginative world-building, complex character arcs, and speculative technology, while maintaining narrative flow and engagement. Our comparative analysis showed that while larger models like GPT-3.5 excel at expansive storytelling, smaller, more efficient models like Mistral-7B can deliver competitive results with proper tuning. The project also highlighted the importance of curated datasets and robust evaluation metrics to ensure both creativity and coherence in generated stories.

Future Scope:

Multimodal Storytelling:

Integrate image generation models (e.g., Stable Diffusion) to produce illustrations, concept art, or comic panels alongside the text, creating a richer and more immersive storytelling experience.

Interactive & Collaborative Writing:

Develop features that allow users to interact with the model in real time, co-create stories, suggest plot directions, or edit generated content, making the platform a true partner in creative writing.

Expanded Genre Support:

Extend the system to support other genres (fantasy, mystery, historical fiction) by fine-tuning on relevant datasets and developing genre-specific prompt templates.

Automated Quality Evaluation:

Develop automated metrics and feedback systems for evaluating narrative coherence, originality, and reader engagement, reducing reliance on manual review.

Open-Source Community & API Integration:

Release the platform as an open-source toolkit and provide APIs for integration with writing apps, educational tools, or entertainment platforms, fostering community-driven innovation and broader adoption.

References:

Fine-tuning and Utilization Methods of Domain-specific LLMs

Preprocessing Steps for Natural Language Processing (NLP)

Github Repository:

The source code can be found here.

Team Members:

Mentors:

Atharva Atul Rege

H S Jayanth

Mentees:

Aahan Wargane

Priyadarshini S

Siddanth Saha

Umar Farooq Nadaf

Nabeel Rizvi

Report Information

Team Members

Team Members

Report Details

Created: May 23, 2025, 12:30 a.m.

Approved by: Vishal Kamath [CompSoc]

Approval date: May 24, 2025, 11:57 p.m.

Report Details

Created: May 23, 2025, 12:30 a.m.

Approved by: Vishal Kamath [CompSoc]

Approval date: May 24, 2025, 11:57 p.m.