UAV Obstacle Collision Avoidance using Deep Reinforcement Learning (TD3)

Abstract

Mentors:

Saddala Reddy Rahul (221ME344)

Kandula Gnaneshwar (221IT035)

Mentees:

Nallola Harshavardhan Goud (231ME330)

Asrith Singampalli (231EC110)

Objective:

To develop an intelligent UAV system that can detect and autonomously avoid obstacles in real time using sensor fusion and deep reinforcement learning (TD3).

Problem Statement:

Traditional UAV navigation systems rely on static, rule-based logic and struggle in dynamic environments. A self-learning system is needed that lets UAVs perceive their surroundings and navigate safely without human intervention.

Proposed Solution:

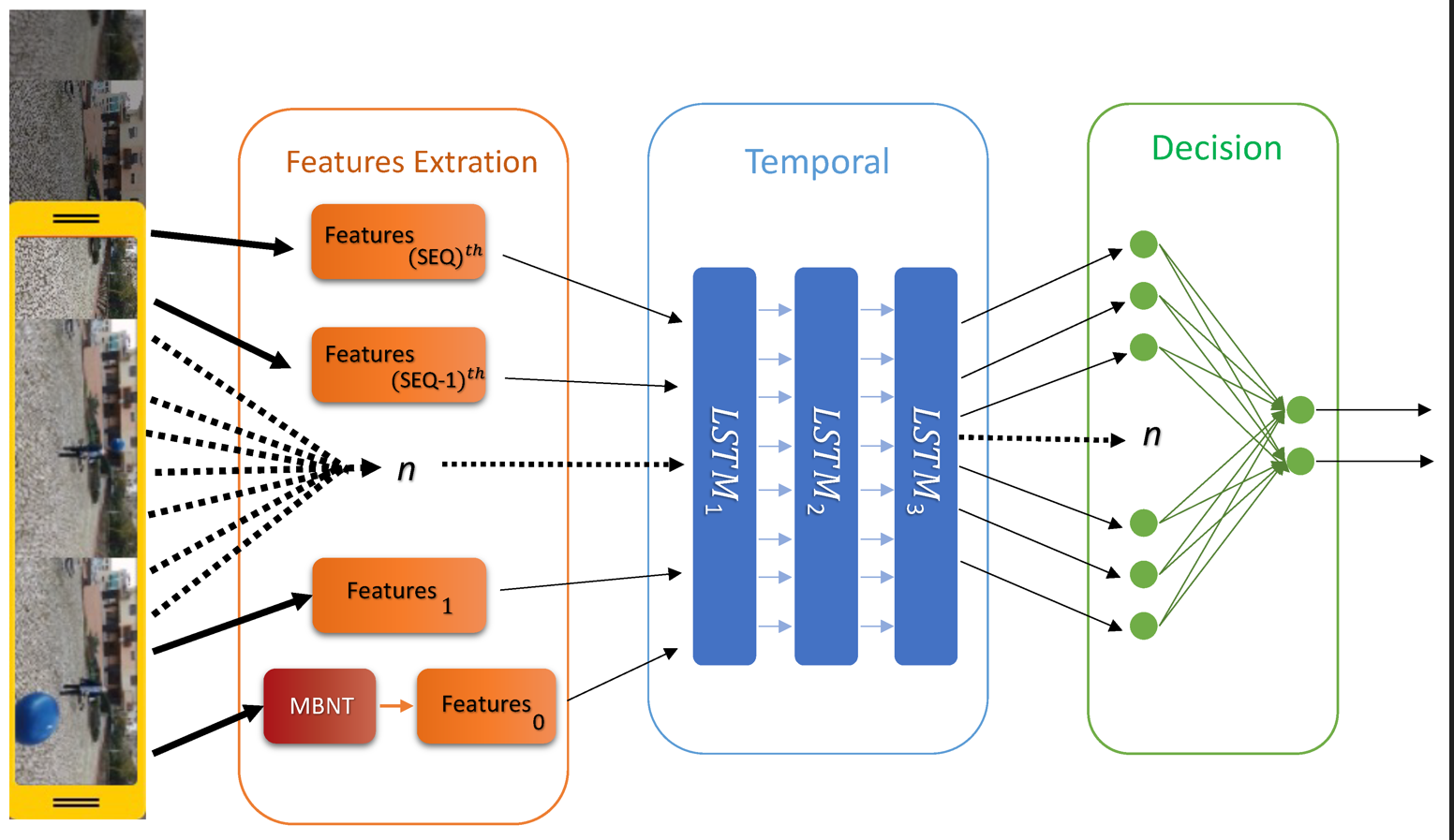

We implemented a two-stage solution:

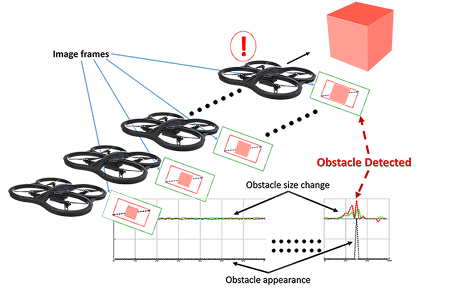

1. A Convolutional Neural Network (CNN) processes real-time RGB camera and LiDAR data to detect obstacles.

2. A Twin Delayed Deep Deterministic Policy Gradient (TD3) reinforcement learning agent controls UAV navigation. By interacting with the environment and learning from reward feedback, the agent learns flight paths that minimize collisions in dynamic environments.
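
Stage 1 consumes raw camera and LiDAR data, and the report names image normalization and point-cloud filtering as the preprocessing applied before the CNN. The sketch below shows one plausible form of each step in plain Python. It is an illustration under assumptions, not the project's code: the function names, the mean/std of 0.5, and the 0.2 m to 10 m range window are all hypothetical, and the image is treated as a single channel for brevity (an RGB image would apply the same step per channel).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def normalize_image(pixels: Sequence[Sequence[int]],
                    mean: float = 0.5, std: float = 0.5) -> List[List[float]]:
    """Scale 8-bit intensities to [0, 1], then standardize as (x - mean) / std."""
    return [[(p / 255.0 - mean) / std for p in row] for row in pixels]


def filter_point_cloud(points: Sequence[Point],
                       min_range: float = 0.2,
                       max_range: float = 10.0) -> List[Point]:
    """Keep LiDAR returns whose Euclidean range lies in [min_range, max_range].

    Very close returns (e.g. hits on the airframe) and distant, sparse returns
    are dropped; both thresholds are illustrative assumptions.
    """
    kept: List[Point] = []
    for x, y, z in points:
        if min_range <= math.sqrt(x * x + y * y + z * z) <= max_range:
            kept.append((x, y, z))
    return kept


if __name__ == "__main__":
    print(normalize_image([[0, 255]]))  # [[-1.0, 1.0]]
    print(filter_point_cloud([(0.1, 0.0, 0.0), (3.0, 4.0, 0.0), (20.0, 0.0, 0.0)]))
    # [(3.0, 4.0, 0.0)]
```

In a PyTorch pipeline the same arithmetic would normally run on tensors in batch, but the per-element logic is identical.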

Architecture & Workflow:

1. Sensor Input: RGB Camera + LiDAR (Simulated)

2. Preprocessing: Image normalization and point cloud filtering

3. Obstacle Detection: The CNN classifies the environment into navigable and non-navigable zones

4. Reinforcement Learning Agent (TD3):

- Receives its state input from the CNN

- Outputs continuous control actions (thrust, pitch, yaw)

- Learns through interaction, using clipped double Q-learning, target policy smoothing, and delayed policy and target-network updates for stability

5. Control Execution: Actions are sent to the simulated UAV in CoppeliaSim, which executes the movement
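
The stabilizing mechanisms named in step 4 can be made concrete with a framework-free sketch of how TD3 builds its critic target and schedules its updates. This follows the published TD3 algorithm (Fujimoto, van Hoof, and Meger, 2018) rather than the project's own code. The critics and actor are passed in as plain callables, parameters are flat lists, and the default hyperparameters (gamma 0.99, policy noise 0.2, noise clip 0.5, tau 0.005, policy delay 2) are the common values from that paper. The report does not state which values the project used.

```python
import random
from typing import Callable, List

Vector = List[float]


def clip(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def td3_target(reward: float, done: bool, next_state: Vector,
               actor_target: Callable[[Vector], Vector],
               q1_target: Callable[[Vector, Vector], float],
               q2_target: Callable[[Vector, Vector], float],
               gamma: float = 0.99, policy_noise: float = 0.2,
               noise_clip: float = 0.5, max_action: float = 1.0) -> float:
    """Critic regression target y = r + gamma * (1 - done) * min(Q1', Q2')."""
    if done:
        return reward  # no bootstrapping from terminal states (e.g. a collision)
    # Target policy smoothing: perturb the target action with clipped Gaussian
    # noise, then clip the result back into the valid action range.
    smoothed = [
        clip(a + clip(random.gauss(0.0, policy_noise), -noise_clip, noise_clip),
             -max_action, max_action)
        for a in actor_target(next_state)
    ]
    # Clipped double Q-learning: bootstrap from the smaller of the two target
    # critics to counter overestimation bias.
    q_next = min(q1_target(next_state, smoothed), q2_target(next_state, smoothed))
    return reward + gamma * q_next


def soft_update(target: Vector, source: Vector, tau: float = 0.005) -> Vector:
    """Polyak averaging: target <- tau * source + (1 - tau) * target."""
    return [tau * s + (1.0 - tau) * t for t, s in zip(target, source)]


def actor_update_due(critic_step: int, policy_delay: int = 2) -> bool:
    """Delayed updates: the actor and all target networks are updated only once
    every `policy_delay` critic updates."""
    return critic_step % policy_delay == 0
```

In a PyTorch implementation, `td3_target` would be computed on a minibatch under `torch.no_grad()`, both critics would regress toward it at every step, and the actor and `soft_update` would run only when `actor_update_due` is true.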

Implementation Details:

- Simulated environments were created in CoppeliaSim, with randomized obstacles and moving targets.

- The TD3 agent was trained with PyTorch on Google Colab, using a replay buffer, delayed policy updates, and target policy smoothing to stabilize training and aid convergence.

- The state space included a depth map, the UAV's velocity, and the relative distance to obstacles.

- The reward function was designed to:

  - Penalize collisions

  - Reward smooth navigation

  - Encourage keeping a safe distance from obstacles
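
The report lists the reward's goals but not its formula. One hypothetical per-step reward that encodes all three goals is sketched below: a fixed penalty on collision, a linear penalty once the nearest obstacle is closer than a safety distance, and a penalty on abrupt changes between consecutive control commands. The function name, the 1 m safety distance, and every weight are assumptions chosen for illustration, not values from the project.

```python
from typing import Sequence


def shaped_reward(collided: bool,
                  min_obstacle_dist: float,
                  action: Sequence[float],
                  prev_action: Sequence[float],
                  safe_dist: float = 1.0,
                  collision_penalty: float = -100.0,
                  proximity_weight: float = 1.0,
                  smoothness_weight: float = 0.1,
                  step_bonus: float = 0.1) -> float:
    """Hypothetical per-step reward covering the three design goals in the report."""
    if collided:
        # Penalize collisions: large negative reward (the episode would end here).
        return collision_penalty
    # Encourage distance maintenance: zero beyond safe_dist, growing linearly
    # as the nearest obstacle gets closer.
    proximity = max(0.0, safe_dist - min_obstacle_dist)
    # Reward smooth navigation: penalize the squared change between consecutive
    # control commands (e.g. thrust, pitch, yaw).
    action_change = sum((a - b) ** 2 for a, b in zip(action, prev_action))
    return step_bonus - proximity_weight * proximity - smoothness_weight * action_change
```

The relative weights matter more than their absolute values: if the smoothness term dominates, the agent may prefer hovering near obstacles to making the sharp maneuver needed to avoid them.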

Results:

- CNN Accuracy: ~96% on obstacle detection (validation set)

- TD3 Navigation Success Rate:

  - Low-density environments: 100% obstacle avoidance

  - Medium-density environments: ~93% success rate

  - High-density environments: ~84% success rate, with adaptive rerouting observed

- Collision Reduction: ~85% fewer collisions than rule-based baselines

- Training Time: ~5 hours for the TD3 agent to converge

- Stability: TD3 produced smoother control actions and less oscillatory motion than DDPG and PPO in identical environments
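
The report does not define exactly how success rate and collision reduction were computed. A common pair of definitions, assumed here and not confirmed by the source, is the fraction of evaluation episodes finished without a collision, and the percentage drop in collision count relative to a baseline controller run on the same episodes. Both function names are hypothetical.

```python
from typing import Sequence


def success_rate(collision_free: Sequence[bool]) -> float:
    """Fraction of evaluation episodes completed without a collision."""
    if not collision_free:
        raise ValueError("need at least one episode")
    return sum(collision_free) / len(collision_free)


def collision_reduction_pct(baseline_collisions: int, agent_collisions: int) -> float:
    """Percentage drop in collision count relative to a baseline controller
    evaluated on the same episodes."""
    if baseline_collisions <= 0:
        raise ValueError("baseline must record at least one collision")
    return 100.0 * (baseline_collisions - agent_collisions) / baseline_collisions
```

Stating definitions like these next to the numbers, along with the number of evaluation episodes per density level, would make the results reproducible.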

Challenges Faced:

- Synchronizing real-time sensor feeds with model inference

- Designing a stable reward function for training TD3

- Simulating realistic dynamic obstacles

- Optimizing the models to run on limited compute for possible deployment on onboard hardware
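
The first challenge, keeping camera and LiDAR data aligned for inference, is often handled by pairing each camera frame with the LiDAR scan closest in time and discarding frames with no scan inside a tolerance window. The sketch below shows that nearest-timestamp approach. It is one possible technique, not the project's documented method, and the function names and 50 ms tolerance are hypothetical.

```python
import bisect
from typing import List, Optional, Sequence, Tuple


def nearest_scan(frame_t: float, scan_times: Sequence[float],
                 tolerance: float = 0.05) -> Optional[int]:
    """Index of the LiDAR scan closest in time to a camera frame, or None if
    no scan lies within `tolerance` seconds. `scan_times` must be sorted."""
    i = bisect.bisect_left(scan_times, frame_t)
    # The closest timestamp is either just before or at/after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(scan_times)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(scan_times[j] - frame_t))
    return best if abs(scan_times[best] - frame_t) <= tolerance else None


def pair_frames(frame_times: Sequence[float], scan_times: Sequence[float],
                tolerance: float = 0.05) -> List[Tuple[int, int]]:
    """Pair each camera frame with its nearest LiDAR scan; unmatched frames are
    skipped rather than sent to inference with stale data."""
    pairs: List[Tuple[int, int]] = []
    for k, t in enumerate(frame_times):
        j = nearest_scan(t, scan_times, tolerance)
        if j is not None:
            pairs.append((k, j))
    return pairs
```

Binary search keeps each lookup at O(log n), which matters when the scan buffer grows during a long flight.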

Future Scope:

- Real-world testing using physical UAVs

- Extend the system to swarm UAV coordination

- Enhance policy robustness with domain randomization

- Integrate multi-agent reinforcement learning for collaborative navigation

Conclusion:

The project demonstrates a deep reinforcement learning framework that combines a CNN for obstacle detection with a TD3 agent for obstacle avoidance in UAVs. In simulation, it reduced collisions by ~85% relative to rule-based baselines and produced smoother control than DDPG and PPO, providing a foundation for intelligent, autonomous aerial navigation.

Report Information

Team Members

Report Details

Created: April 7, 2025, 5:52 p.m.

Approved by: Joel Jojo Painuthara [Diode]

Approval date: None